By now it’s evident that artificial intelligence (AI) is the singular most definitive technology of this generation, and it’s powering broad industrial transformation across critical use cases. Enabling this AI-driven transformation hinges on accurate, high performing AI models and model training acceleration.

Ronald van Loon is a NVIDIA partner and had the opportunity to apply his expertise as an industry analyst to explore the implications of MLPerf benchmarking results on the next generation of AI.

Enterprises are facing an unprecedented moment as they strive to leverage AI for competitive advantage. This means optimizing training and inferencing for AI models to gain differentiating benefits, like significantly improved productivity for their data science teams and achieving faster time to market for new products and services.

However, AI is advancing incredibly quickly and AI model size is dramatically increasing in such areas as Natural Language Processing (NLP), which has grown 275 times every two years using the Transformer neural network architecture. For example, NVIDIA recently developed Megatron-Turing NLG 530B, an AI language model with more than 500 billion parameters, a sizable leap forward from the previous record holder for biggest language model, OpenAI’s 173 billion parameter GPT-3.

Training AI for real world applications and use cases is complex, and large-scale training demands unique system hardware and software to underpin specialized performance requirements at scale.

Why Scaling is a Critical Factor in Training AI

AI adoption has demonstrated growing momentum due to the pandemic. A recent study indicates that 52% of businesses increased their AI adoption plans due to this ongoing global event, and 86% state that AI is a mainstream technology for their organization this year.

This adoption is reflected in the enormous variety in AI models and use cases today. In healthcare, AI models are used for medical imaging and diagnostics, as well as molecular simulations for drug discovery. In retail, eCommerce, and the consumer internet, AI models are used for recommendation engines to help customers find relevant products and content. Numerous industries are leveraging Conversational AI to improve customer service and support, which uses AI models to understand, interpret and mimic human speech patterns. Digital twins are being used for simulating and enhancing engineering designs and enable deeper comprehension of highly complicated patterns, like in climate change.

When it comes to actually training AI models, AI scaling is crucial. Many aspects of the process can quickly snowball into bottlenecks and numerous challenges arise, from distributing and coordinating work to moving data. But AI scaling is a critical aspect of training AI:

- AI is advancing at an astonishing pace, with state of the art models doubling in size approximately every 2.5 months, according to OpenAI. It takes a substantial amount of time to train giant models, and it would be impossible to continuously advance AI without the ability to scale.

- AI requires writing software that continually grows, and organizations need the critical ability to quickly iterate alongside the scale.

- The time to train AI models is directly connected to the total cost of ownership and ROI for AI projects.

Though there’s traditionally been a fairly common misconception connecting AI model training and retraining to only the cost of infrastructure and ROI, modern enterprises are also concerned about the productivity of their data science teams and being faster than their competitors in delivering updates to the market. So there’s a fundamental shift happening regarding considerations for AI projects.

Simply put, scaling makes the fastest time to train possible.

MLPerf Training Benchmarks

AI training speed was under the microscope in the latest rendition of MLPerf. MLCommons, an open engineering consortium, recently launched the 5th round for MLPerf Training v1.1 benchmark results. MLPerf began three years ago, and is a benchmark for machine learning (ML), extending across training, inference, and HPC workloads, and including application diversity. It provides an apples-to-apples, peer reviewed comparison of performance with the goal of providing fair metrics and benchmarks to enable a “level playing field” where competition propels the industry forward and drives innovation.

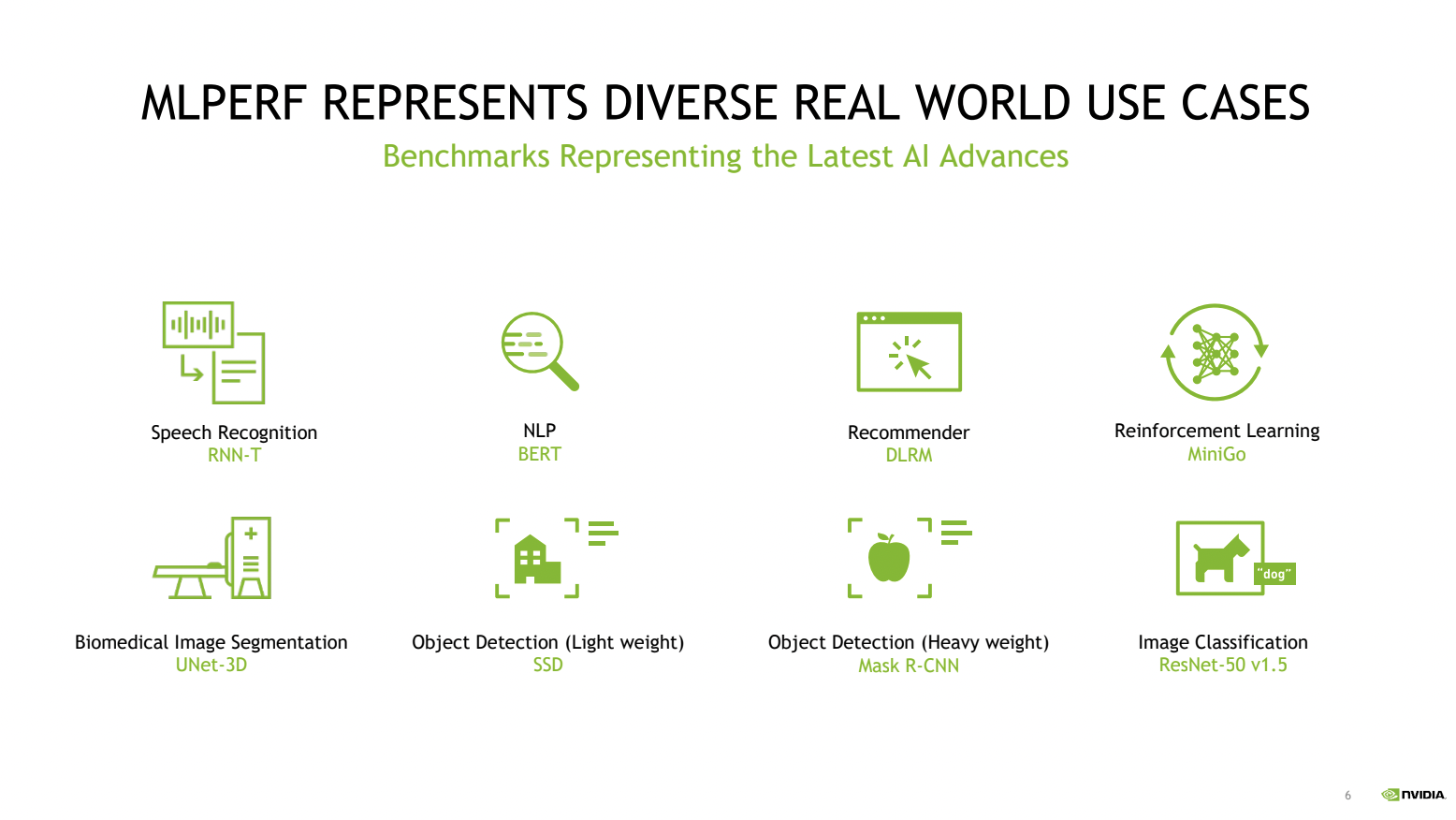

In this iteration of MLPerf, 14 organizations provided submissions and 185 peer-reviewed results were released. Real-world use cases and AI advancements are represented, including speech recognition, object detection, reinforcement learning, NLP, and image classification, among others.

In the last three years since MLPerf started, NVIDIA AI has improved performance by 20x, and compared to the previous round, provided 5x faster performance at scale in 1 year with full stack innovation. NVIDIA AI was the fastest to train at scale, and had the fastest per-chip performance. Some of the new improvements that enabled this performance included:

- CUDA Graphs: Addresses CPU bottlenecks and runs entire training iterations on one GPU.

- CUDA Streams: Improves parallelism by providing fine gained overlap of computations and communications, enhancing the efficiency of parallel processing.

- NCCL and SHARP: Improves GPU and multi node processing and eliminates the need to send data multiple times across different end points and servers.

- MXNet: Improves the efficiency of memory copies for operations like concatenation and display.

Microsoft’s Azure NDm AI00 V4 instance was recognized as the fastest CSP for training AI models, scaling up to 2,048 AI00 GPUs. This performance allows users to train models at top speeds with any of their preferred services or systems.

When considering what the MLPerf results mean in relation to their unique AI model training needs, enterprises should assess their own specific requirements to make an informed purchasing decision. Also, they should include target metrics in their evaluation, including optimizing time to train and data science team’s productivity, time to launch products to the market, and cost of infrastructure.

Giant Model Training

One of the key centers of innovation at MLPerf is giant model training. Some of the aforementioned AI advancements, including the use cases in computer vision and robotics, have been unblocked thanks to giant AI models.

Google, for example, submitted a giant model in the Open category for MLPerf, a very important area of innovation. These models demand very large scale infrastructure and complex software. The majority of AI programs are trained using GPUs, a chip developed for computer graphics but is also ideal for the parallel processing demanded by neural networks. Giant AI models are distributed throughout upwards of hundreds of GPUs that are connected via high-speed GPU fabric and fast networking.

Democratizing the training of giant AI models requires a specialized framework and distributed inference engine for model inference because the models are too large to fit a single GPU. NEMO Megatron and Triton, part of NVIDIA’s software stack, allow enterprises to have the capabilities to train and infer giant models.

Given the growth of AI models, having the computing requirements to radically improve and speed up the scale of AI model training for advanced applications can help support teams of data scientists and AI researchers so they can continuously push AI innovation to new fronts.

Key Ingredients in the Evolution of AI

High performing AI models are a key component of transformation as AI advances and model sizes explode. Enterprises need a technology stack and platform to support scaling and accelerating AI training to ensure that they can empower their data scientists, continuously stay ahead of the competition, and meet the changing architecture requirements of future AI models.

Visit NVIDIA for more information and resources to help organizations succeed at AI training for their real world AI projects.\

By Ronald van Loon

The ‘Cloud Syndicate’ is a mix of short term guest contributors, curated resources and syndication partners covering a variety of interesting technology related topics. Contact us for syndication details on how to connect your technology article or news feed to our syndication network.